Building a magic wand with an IR camera

A lot of my projects start with a thought.

Wouldn’t it be fun if…

… I had a wand that I could use to trigger home automations? YES IT WOULD. That’s it. That’s the motivation.

Figuring out how to do it.

My initial idea was to build a gyroscope/accelerometer into the wand. However, this would need a battery and a microcontroller. Even though extracting gestures should be pretty easy this way, there is no way for me to fit all of this into a wand. So, this is not the way.

I moved on to my favorite topic at university: computer vision. What if I used a camera to track the wand and draw a path this way? I have a spare Raspberry Pi Zero and a matching camera. The Pi Zero does not have a lot of power, but maybe I can make it work anyway. Before I could start on this, I remembered a seminar from university where we used FIM imaging to track daphnia. FIM imaging uses frustrated total internal reflection: IR LEDs shine into acrylic glass, and the larvae on top of the glass reflect the infrared light into an IR camera. This way, only the larvae are visible. Reflecting infrared into a camera seemed like a good approach.

So I impulse-bought an IR camera for my Raspberry Pi and an IR filter. Additionally, I took a reflector from my bike to use as a wand. My new goal: make the tip of the wand reflective. With the IR filter I could block all light except infrared from reaching the camera. The reflective wand would then be a bright spot which I can easily track. Well, it worked. But far worse than I hoped.

For the time being I worked on my PC. I simply streamed the video from the Pi Zero using mjpg_streamer. Once I managed to run everything on the Pi, I removed mjpg_streamer and read the frames directly via OpenCV.

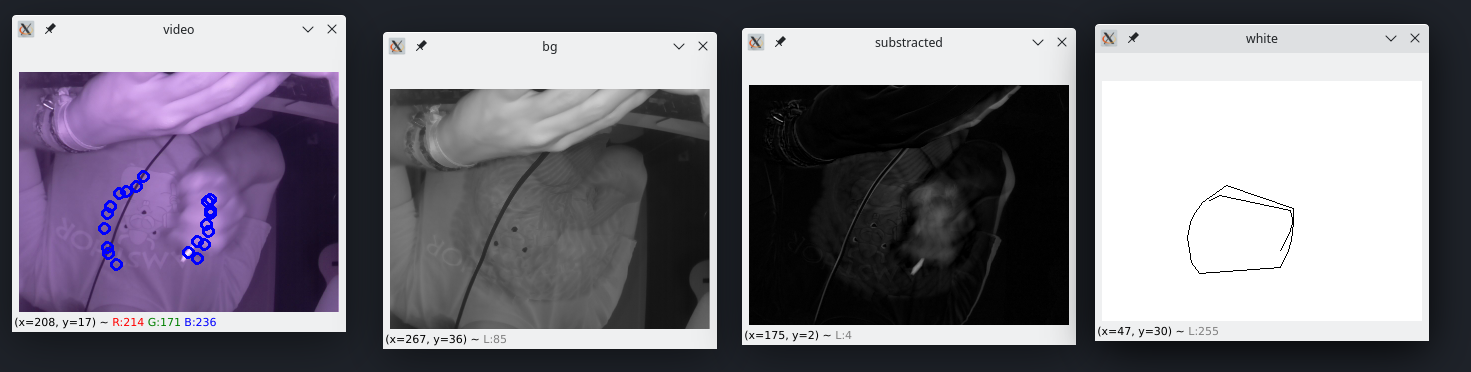

So, I read the frame, convert it to grayscale and apply background subtraction. This way I can remove bright spots like my ceiling lamp from the image. Afterwards I apply a Gaussian blur and use minMaxLoc() from OpenCV to find the brightest spot in the image.

For now, I set an arbitrary brightness threshold of 130. I keep the last 20 points and discard older ones. This way, I can draw a line. Of course, there is a lot more to optimise here, but this will be done later (TM). The result can be seen here:

Classifying gestures

Now to the next big question. How do I match my lines to predefined gestures?

Initially, I played around with matchShapes() from OpenCV and achieved no acceptable results.

I was sure that I could use TensorFlow or PyTorch to do it, but there would be little chance of running this on a Pi Zero W. Anyways, I wanted a first result. So there we go.

I spent the next hour recording gestures and labeling them. I then trained the following network:

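    from tensorflow.keras.models import Sequential
    from tensorflow.keras.layers import (Activation, Conv2D, Dense, Dropout,
                                         Flatten, MaxPooling2D)

    # input_shape is (height, width, channels) of the recorded gesture images
    # and has to be defined before this point.
    model = Sequential()

    # Three blocks of convolution, ReLU and 2x2 max pooling
    model.add(Conv2D(32, (2, 2), input_shape=input_shape))
    model.add(Activation('relu'))
    model.add(MaxPooling2D(pool_size=(2, 2)))

    model.add(Conv2D(32, (2, 2)))
    model.add(Activation('relu'))
    model.add(MaxPooling2D(pool_size=(2, 2)))

    model.add(Conv2D(64, (2, 2)))
    model.add(Activation('relu'))
    model.add(MaxPooling2D(pool_size=(2, 2)))

    # Classifier head: one sigmoid output, so this is a binary decision
    model.add(Flatten())
    model.add(Dense(64))
    model.add(Activation('relu'))
    model.add(Dropout(0.5))
    model.add(Dense(1))
    model.add(Activation('sigmoid'))

    model.compile(loss='binary_crossentropy',
                  optimizer='rmsprop',
                  metrics=['accuracy'])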

The model works, but I noticed a lot of problems.

First: mjpg_streamer stutters badly on my Pi, and I rely heavily on a steady framerate to detect gestures. The example images clearly show heavy stutters, even though I tried to draw a circle. This leads to problems with classification.

Anyways, I added a webhook to Home Assistant to turn a lamp on and off. Now I had a working prototype!
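Calling such a webhook needs very little code. Home Assistant exposes webhook triggers under `/api/webhook/<webhook_id>`, and a POST request is enough to fire one. The host name, port and webhook id below are placeholders, not the values from my setup, and the helper names are made up for this sketch.

```python
import json
import urllib.request


def build_webhook_request(base_url, webhook_id, payload=None):
    """Build the POST request for a Home Assistant webhook trigger."""
    url = f"{base_url.rstrip('/')}/api/webhook/{webhook_id}"
    data = json.dumps(payload or {}).encode("utf-8")
    return urllib.request.Request(
        url,
        data=data,
        method="POST",
        headers={"Content-Type": "application/json"},
    )


def trigger_webhook(base_url, webhook_id, payload=None, timeout=5):
    """Send the request and return the HTTP status code."""
    request = build_webhook_request(base_url, webhook_id, payload)
    with urllib.request.urlopen(request, timeout=timeout) as response:
        return response.status


# Usage (hypothetical host and webhook id):
# trigger_webhook("http://homeassistant.local:8123", "wand_gesture", {"gesture": "circle"})
```

The automation on the Home Assistant side then decides what the webhook does, for example toggling the lamp.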

The next day

My brain kept ruminating on how to improve performance. I did not want to use machine learning for this, as it seemed like overkill. What if I just defined gestures as a set of points? I could then take the direction I move in from the first to the second point and compare it to my gestures. Once I have multiple points, I can take the best match. Maybe it can work.

Another thing that popped into my mind: IS THIS THE TIME? Should I learn Rust? Is this a use case? Since I’ve wanted to meddle with Rust for a long time, I will give it a try.

However, before I jumped into action another possibility arose. My girlfriend watched a video of the Harry Potter theme park, where they use similar technology. While watching, she exclaimed “Oh, the gesture is just a 1”. Another gesture seems to be a 4.

This looked familiar. Is it just the MNIST database? That would make training my own model completely unnecessary. There has to be prebuilt stuff that I can just use. It looks like multiple gestures are just numbers or letters. I decided to just focus on numbers for now, so I could use MNIST. So I reorganized my thoughts:

- First, check if there is a usable MNIST implementation in Rust

- If not, first do a test run in Python, since I know Python a lot better than Rust

- If this works, try to recreate it in Rust

- If not: Try my approach of matching the points.

After a bit of research I found an implementation by mauri870 on GitHub. It uses the Fast Artificial Neural Network (FANN) library. Since there was little work involved, I decided to give it a try and started training the model. If it proved to be fast and accurate enough, I should be able to handle the rest with ease, since I already knew that Rust bindings for OpenCV exist. Training took a while, so I continued working on a paper for university.

After the model had trained, I realized that I needed a way to classify my own images, not just the test images. After reading through the code for a bit, I decided to message mauri. He quickly responded and recommended not using FANN for this and looking for TensorFlow bindings instead.

After more trial, search and error, I once again rearranged my thoughts.

Recognizing gestures - V2

Why did I go for a complicated solution for all of this? For now, I only needed to recognize one gesture. We settled on a ‘1’ as the trigger, and that is a very easy pattern. That’s a problem that does not need machine learning.

Flashbacks to a Professor who teaches CV: Machine learning is a hammer, but not everything is a nail.

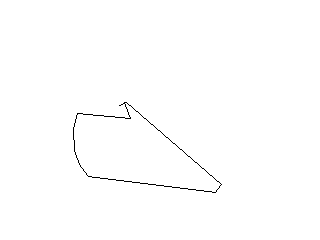

So I went back to the idea that I had ignored: define gestures as multiple vectors with a certain direction.

A ‘1’ is made up of two parts: a diagonal line and a straight line. I calculated the current direction of movement as an angle and drew a few lines in the air to get a feeling for the angles. I took note of the two directions important for a ‘1’. Now I could work on recognizing a gesture.
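Calculating such a direction is a one-liner with `atan2`. The sketch below assumes image coordinates with y pointing down and reports angles in degrees, with 0 pointing right and 90 pointing up. That convention and the function names are my choice for this example, so the concrete angles for a ‘1’ depend on it.

```python
import math


def direction_deg(p, q):
    """Direction of the movement from point p to point q in degrees (0..360).

    Image y grows downwards, so the y difference is flipped to make
    90 degrees mean "up" on screen.
    """
    return math.degrees(math.atan2(p[1] - q[1], q[0] - p[0])) % 360


def angle_diff(a, b):
    """Smallest difference between two directions in degrees (0..180)."""
    return abs((a - b + 180) % 360 - 180)
```

`angle_diff` handles the wrap-around at 0/360, so directions of 350 and 10 degrees count as 20 degrees apart rather than 340.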

As long as there is no strong deviation from the current direction, each new point is seen as part of the current line. When there is a strong change in direction, a new line is started, and so on. Now I have a list of directions, which I can use to match a set of gestures to the movement of the wand. For each new entry I check for gestures that consist of as many lines as I have currently recognized. For those, the difference between the direction of the defined and the measured line is calculated. If the difference is below a set threshold, the next entry is checked. Additionally, the length of the recorded lines is checked. This prevents some false positives, since there may be a lot of jitter when holding the wand still.

After ‘some’ tinkering with the thresholds, I managed to get semi-reliable results. Since we wanted to use this for a Halloween party (yes, this has been in the works for quite some time), I needed results quickly, so I was content with a single gesture.
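Roughly, the splitting and matching described above could look like the following sketch. All thresholds, the gesture template for the ‘1’ and the function names are assumptions made for this example, not the values I ended up with. Angles are in degrees, with 0 pointing right and 90 pointing up on screen.

```python
import math


def direction_deg(p, q):
    """Direction from p to q in degrees; image y points down, 90 means up."""
    return math.degrees(math.atan2(p[1] - q[1], q[0] - p[0])) % 360


def angle_diff(a, b):
    """Smallest difference between two directions in degrees (0..180)."""
    return abs((a - b + 180) % 360 - 180)


def split_into_lines(points, turn_threshold=40.0):
    """Turn a list of (x, y) points into a list of (direction, length) lines."""
    if not points:
        return []
    lines = []
    start = prev = points[0]
    for point in points[1:]:
        if point == prev:
            continue  # no movement, so no direction to compare
        if prev != start:
            step = direction_deg(prev, point)
            current = direction_deg(start, prev)
            if angle_diff(step, current) > turn_threshold:
                # Strong change in direction: close the line, start a new one.
                lines.append((current, math.dist(start, prev)))
                start = prev
        prev = point
    if prev != start:
        lines.append((direction_deg(start, prev), math.dist(start, prev)))
    return lines


# Hypothetical template: diagonal up to the right, then straight down.
GESTURES = {"one": [60.0, 270.0]}


def match_gesture(lines, gestures=GESTURES, angle_tolerance=30.0, min_length=20.0):
    """Return the name of the first gesture that fits all lines, else None."""
    for name, template in gestures.items():
        if len(template) != len(lines):
            continue  # only compare gestures with the same number of lines
        if all(
            length >= min_length and angle_diff(direction, expected) <= angle_tolerance
            for (direction, length), expected in zip(lines, template)
        ):
            return name
    return None
```

The minimum length is what filters out jitter: a wand held still produces many tiny lines in random directions, and none of them is long enough to count.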

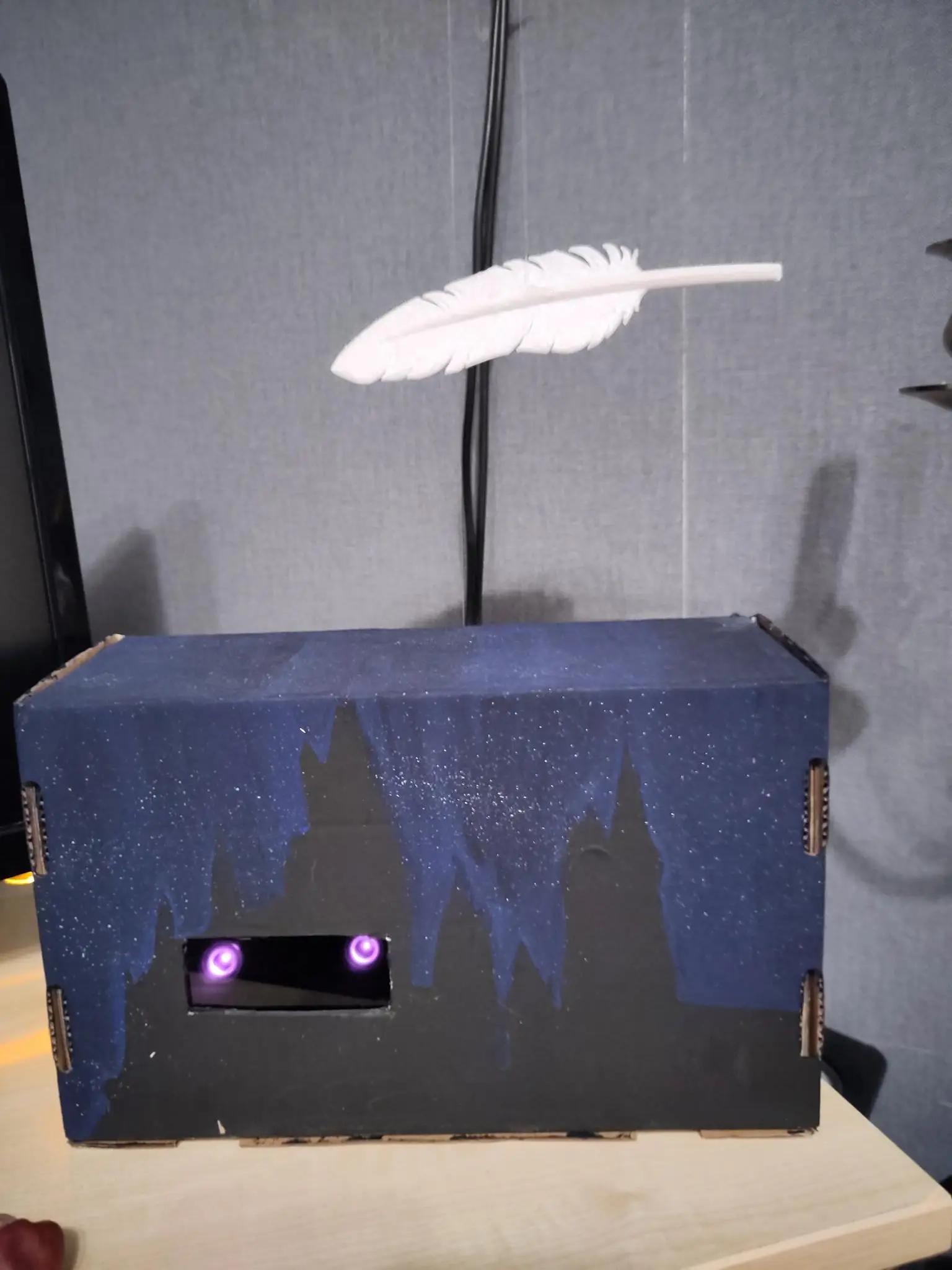

Reacting to a gesture

Recognizing a gesture is not everything that needed to be done. Without a proper reaction, it is pretty useless. I 3D printed a feather (a normal feather is far too lightweight) and my girlfriend painted a box with a Hogwarts silhouette so I had a place to hide everything. I put the Pi Zero, a motor and a driver for the motor in there. For the motor I printed a spool with one big flaw: the parts on the side were much too small, so I had to extend them using cardboard. I then wound a nylon thread onto the spool, led it through a loop and glued it to the feather.

When a gesture is recognized, the motor turns for a few seconds, waits, and then turns back for the same amount of time. Additionally, the WLED lamp on the wall is triggered for the same amount of time. I’m pretty impressed with the results, but I will need to optimize the code in the future to remove some of my shortcuts. Maybe I’ll find the time to rewrite the code in Rust. Maybe. I say that too much…
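The sequence itself is simple. Here is a sketch with the hardware hidden behind two parameters: `motor` is any object with `forward()`, `backward()` and `stop()` methods (gpiozero's `Motor` class has methods with these names), and `set_lamp` is a hypothetical callback that switches the WLED lamp. The durations are placeholders.

```python
import time


def levitate(motor, set_lamp, run_seconds=3.0, hold_seconds=2.0, sleep=time.sleep):
    """Wind the thread up, hold the feather in the air, then lower it again.

    The lamp stays on for the whole sequence. `sleep` can be swapped out,
    which makes the sequence testable without waiting.
    """
    set_lamp(True)
    try:
        motor.forward()        # wind the thread onto the spool
        sleep(run_seconds)
        motor.stop()
        sleep(hold_seconds)    # feather hovers
        motor.backward()       # unwind for the same amount of time
        sleep(run_seconds)
    finally:
        # Always stop the motor and switch the lamp off, even on errors.
        motor.stop()
        set_lamp(False)
```

Running the motor back for exactly the same time only returns the feather to its start position if the motor turns equally fast in both directions, which is one of the shortcuts worth revisiting.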

Anyways: Here is my result: